Introduction

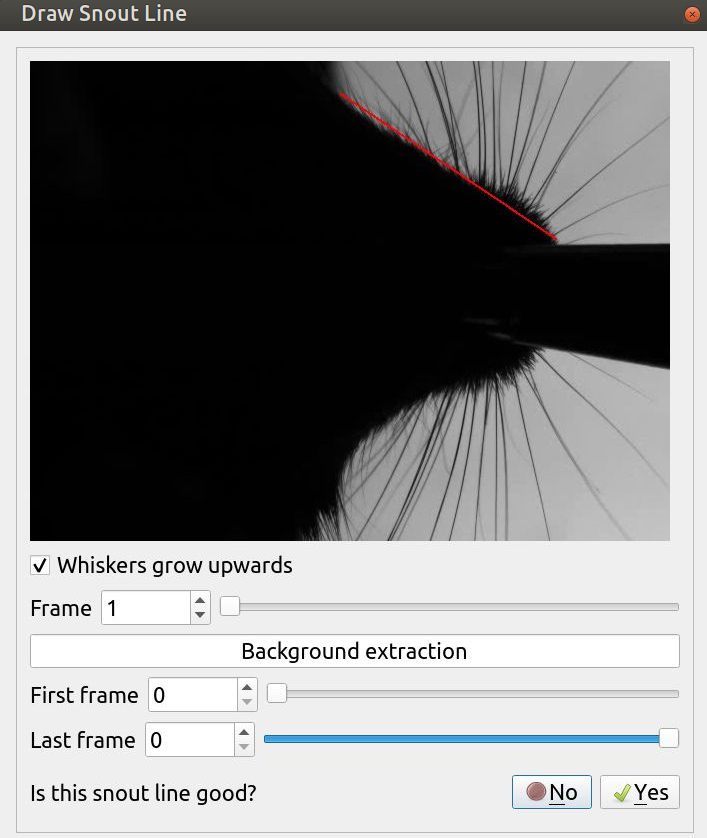

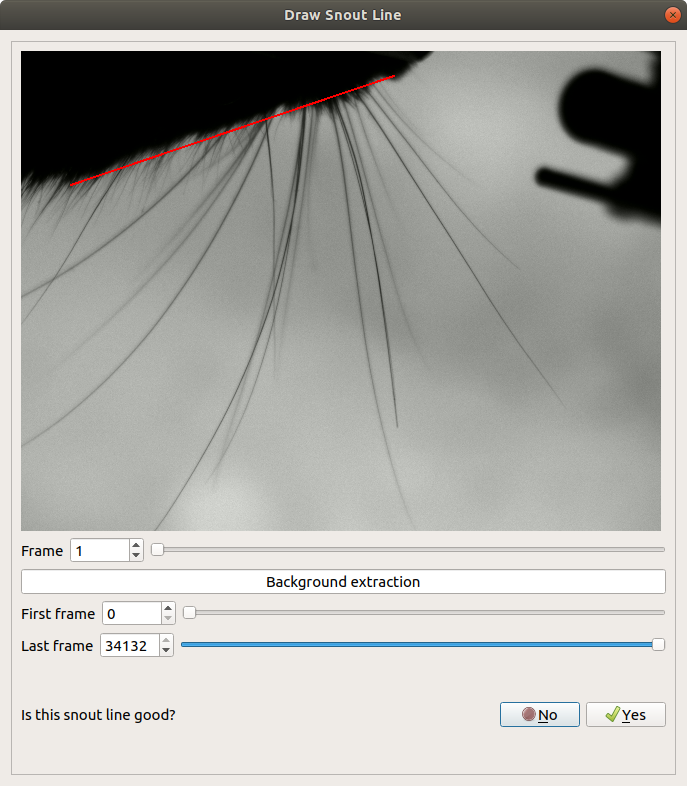

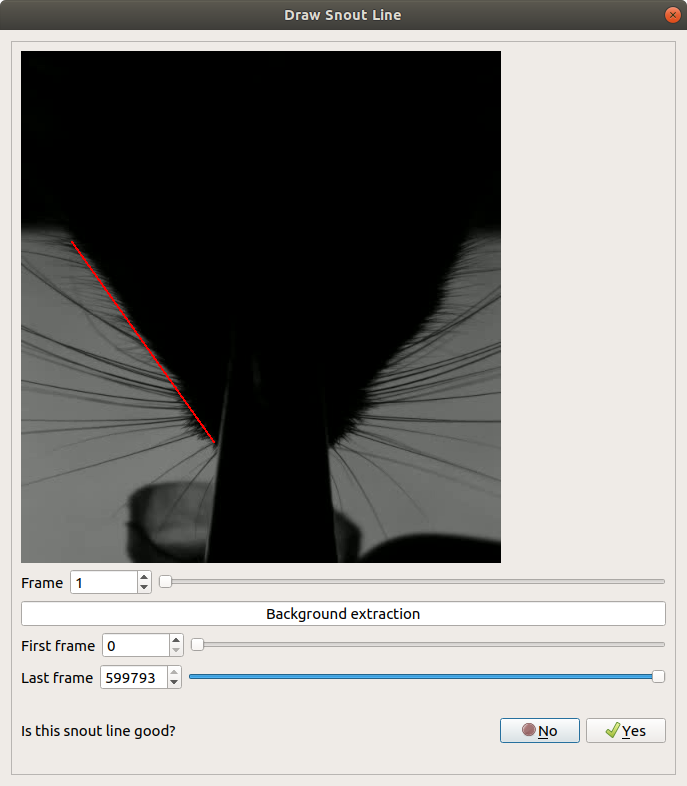

The rodent whisker system is a prominent experimental model for studying sensorimotor integration and active sensing. Thanks to improved video-recording technology and progressively better neurophysiological methods, it is now feasible to analyze the intact vibrissal sensorimotor system precisely. The vibrissae and snout analyzer (ViSA), also known as BWTT, is a widely used algorithm based on computer vision and image processing. It has proven successful for tracking and quantifying rodent sensorimotor behaviour, but at a significant cost in processing time.

Unfortunately, the ViSA processing rate lags far behind the data-generation rate of modern cameras. Accelerating the whisker-tracking algorithm could speed up behavioural and neurophysiological research considerably. It could also become the cornerstone of online whisker tracking, which would not only eliminate the need for large storage to keep raw videos but also allow novel experimental paradigms based on real-time behaviour.

Stage one – Offline Processing

Our experimental results indicate that the best option for an offline implementation of ViSA is currently OpenMP-based CPU execution. Using 16 CPU threads, we achieve a speedup of more than 4,500x over the original serial MATLAB version, for an average processing latency of 1.2 ms/frame, which is a solid step towards real-time (and online) tracking. Our analysis indicates that running the algorithm on a machine with 32 threads can reduce this latency to 0.72 ms/frame, thereby enabling real-time performance. This will allow direct interaction with the whisker system during behavioural experiments.
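The latency figures above can be turned into throughput with simple arithmetic. The sketch below is illustrative only: it assumes a camera rate of 1,000 frames per second (the rate mentioned for the online mode), and the function names `frames_per_second` and `keeps_up` are hypothetical, not part of ViSA.

```c
#include <assert.h>

/* Frames processed per second for a given per-frame latency (in ms). */
double frames_per_second(double ms_per_frame) {
    assert(ms_per_frame > 0.0);
    return 1000.0 / ms_per_frame;
}

/* Returns 1 when processing keeps pace with a camera that produces
 * camera_fps frames per second, and 0 otherwise.
 * The camera rate is an assumption supplied by the caller. */
int keeps_up(double ms_per_frame, double camera_fps) {
    return frames_per_second(ms_per_frame) >= camera_fps;
}
```

Under that assumption, 1.2 ms/frame corresponds to roughly 833 frames per second, which falls short of 1,000, while 0.72 ms/frame corresponds to roughly 1,389 frames per second, which leaves some headroom.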

Stage two – Online Processing

There is strong experimental interest in tracking live subjects online. In online mode, the recording device will produce a batch of 1,000 frames each second and stream it to our processing system. The initial idea was to use only DFEs, to exploit their advantage in stream processing. However, evaluation of the C-DFE accelerated version indicates that the DFE cannot provide sufficient hardware resources to meet the online-processing goal, i.e. the FPGA runs out of resources. The OpenMP-accelerated version, on the other hand, processes frames fast enough for real-time operation. A sufficiently powerful multi-core CPU should therefore satisfy the online-processing requirement. The recording facility will generate batches of images and transfer them to the work node over Ethernet. The OpenMP-accelerated version will then process each input batch and send the resulting output back out over Ethernet.
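The batch-processing step could look roughly like the following. This is a minimal sketch, not the ViSA implementation: it assumes that the frames within a batch can be processed independently, and `track_frame` is a hypothetical placeholder (it only sums pixel values) standing in for the real per-frame tracking. Network transfer is left out.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for per-frame tracking: sums the pixel values.
 * The real algorithm would extract snout and whisker parameters here. */
static uint64_t track_frame(const uint8_t *pixels, size_t n_pixels) {
    uint64_t sum = 0;
    for (size_t i = 0; i < n_pixels; ++i) {
        sum += pixels[i];
    }
    return sum;
}

/* Processes one batch of frames stored back to back in `frames`.
 * Each loop iteration writes only results[f], so iterations are
 * independent and can be shared among OpenMP threads. */
void process_batch(const uint8_t *frames, size_t n_frames,
                   size_t n_pixels, uint64_t *results) {
    assert(frames != NULL && results != NULL);
    #pragma omp parallel for schedule(static)
    for (long f = 0; f < (long)n_frames; ++f) {
        results[f] = track_frame(frames + (size_t)f * n_pixels, n_pixels);
    }
}
```

When compiled with OpenMP enabled (for example with `-fopenmp` on GCC), the loop is divided among the available threads; without it, the pragma is ignored and the same code runs serially. If the tracking of one frame depended on the result of the previous frame, this frame-level split would not apply as written.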