Introduction

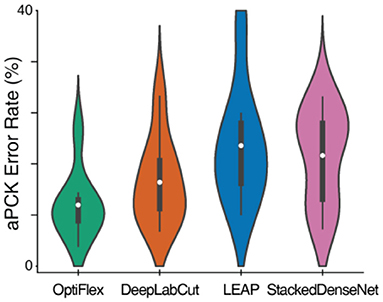

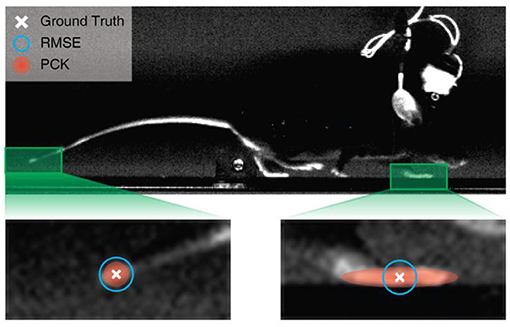

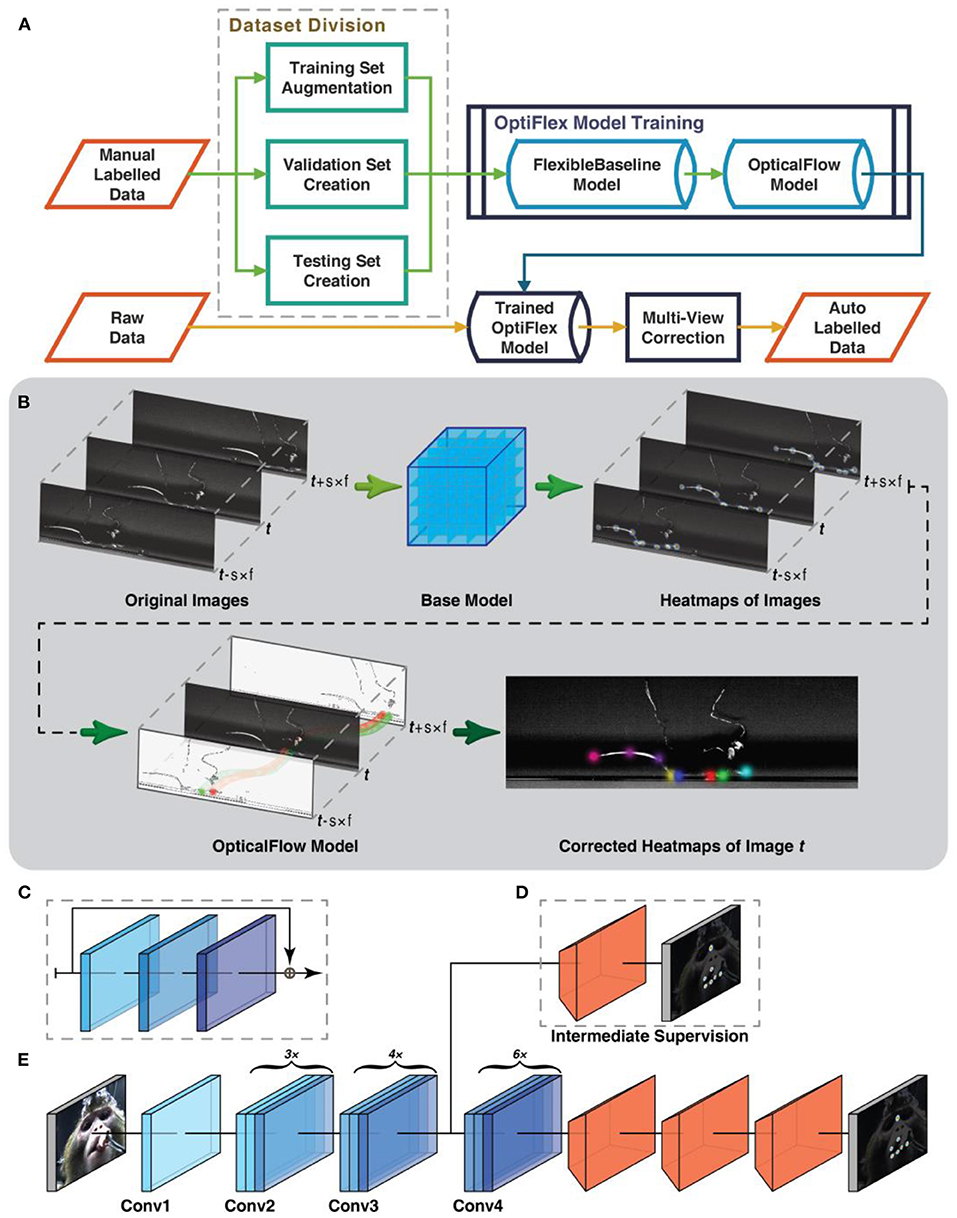

Animal pose estimation tools based on deep learning have significantly improved the quantification of animal behaviour. These tools analyze individual video frames to determine the pose of animals, but they do not account for variation in animal body shape when making predictions and evaluations. We have developed a new framework called OptiFlex that addresses this limitation. OptiFlex combines a flexible base model, FlexibleBaseline, which accounts for variation in animal body shape, with an OpticalFlow model that incorporates temporal context from nearby video frames. By using multi-view information, OptiFlex optimizes pose estimation in all four dimensions (3D space and time). We evaluated FlexibleBaseline on datasets of four different lab animal species (mouse, fruit fly, zebrafish, and monkey) and introduced a user-friendly evaluation metric called the adjusted percentage of correct key points (aPCK). Our analyses demonstrate that OptiFlex surpasses existing deep learning-based tools in prediction accuracy, which highlights its potential for studying various behaviours across different animal species.

General workflow

The workflow starts after video recordings of animal behaviours have been obtained. A human annotator first labels a number of continuous frames, which are then preprocessed (details in the Data Preprocessing section of Methods) and split into three datasets: a training set, a validation set, and a test set. Models presented in this work are trained on the training set and evaluated on the validation set. These evaluation results are used not only to tune the hyperparameters of the models (such as learning rate, dropout rate, and number of filters in convolution layers) but also to determine whether more labelled data should be obtained. The model with the best performance on the validation set is chosen as the final model. For OptiFlex, this training process happens twice: once for the base model, FlexibleBaseline, and once for the OpticalFlow model. After satisfactory evaluation results have been obtained on the validation set, a final evaluation can be run on the test set to quantify how well the trained model generalizes to new data. All of the test datasets include frames of different animals of the same type to ensure generalizability of the prediction results. This marks the end of the training process. Any new videos of animals in the same experimental configuration can now be used as inputs to the trained model, and the outputs will be the videos labelled with the locations of user-defined key points.
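The split-and-select part of this workflow can be illustrated with a minimal Python sketch. It is not taken from the OptiFlex code base: the function names `split_frames` and `select_best`, the split fractions, the random shuffling, and the scoring callback are all assumptions made for illustration. The source does not state the split ratios or how frames are assigned to each set.

```python
import random
from typing import Callable, Dict, Hashable, List, Sequence, Tuple


def split_frames(
    frame_ids: Sequence[Hashable],
    val_frac: float = 0.1,   # assumed fraction, not from the source
    test_frac: float = 0.1,  # assumed fraction, not from the source
    seed: int = 0,
) -> Tuple[List[Hashable], List[Hashable], List[Hashable]]:
    """Shuffle labelled frame ids and split them into train/val/test lists."""
    if val_frac < 0 or test_frac < 0 or val_frac + test_frac >= 1:
        raise ValueError("val_frac and test_frac must be >= 0 and sum to < 1")
    ids = list(frame_ids)
    random.Random(seed).shuffle(ids)  # seeded for a reproducible split
    n_val = round(len(ids) * val_frac)
    n_test = round(len(ids) * test_frac)
    test = ids[:n_test]
    val = ids[n_test:n_test + n_val]
    train = ids[n_test + n_val:]
    return train, val, test


def select_best(
    candidates: Dict[str, dict],
    score_on_val: Callable[[dict], float],
) -> Tuple[str, Dict[str, float]]:
    """Score each hyperparameter setting on the validation set and
    return the name of the highest-scoring one plus all scores."""
    scores = {name: score_on_val(cfg) for name, cfg in candidates.items()}
    best = max(scores, key=scores.get)
    return best, scores
```

In practice, `score_on_val` would train a model with the given hyperparameters on the training set and return its validation score. The test set is touched only once, after `select_best` has fixed the final model.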

Publications

Liu X, Yu SY, Flierman NA, Loyola S, Kamermans M, Hoogland TM, De Zeeuw CI. OptiFlex: Multi-Frame Animal Pose Estimation Combining Deep Learning With Optical Flow. Front Cell Neurosci. 2021 May 28;15:621252. doi: 10.3389/fncel.2021.621252. PMID: 34122011; PMCID: PMC8194069.